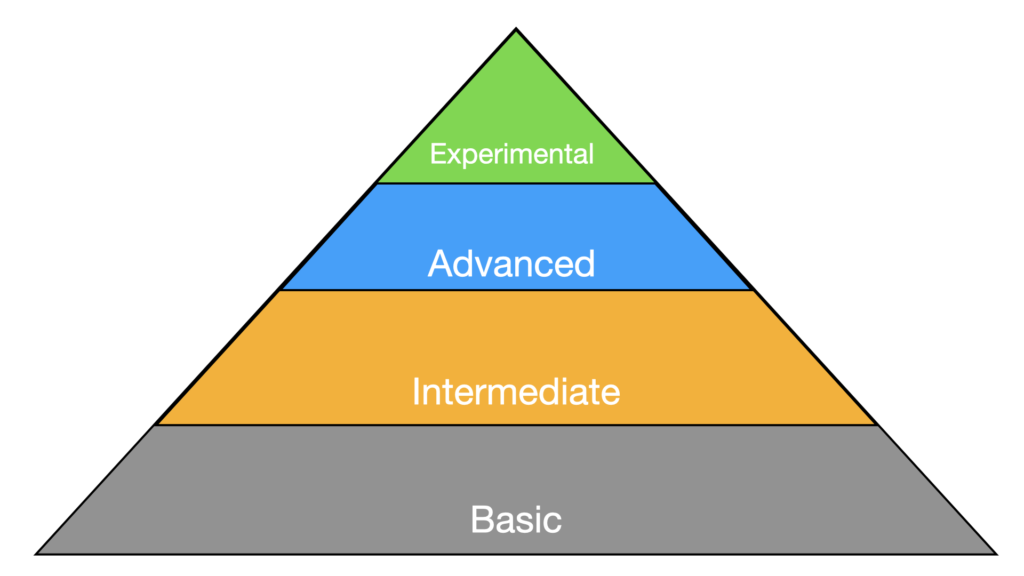

In our first post on this topic, we introduced the Hierarchy of Security Needs, tied it to psychology, and explained why we thought it fit nicely in a discussion of security maturity. The TL;DR of that post is that it is handy to have a model for how foundational a particular security tool, function, or control is, and that by being honest with ourselves about this, we can do a better job of building a security program. If you want to know where to start, go back and look at the Basics from the first post. In this post, we dive into levels 2 and 3 of the hierarchy and talk about the tools people are using there.

This post covers the middle of the pyramid. Remember from the first post that many vendors will tell you they belong at the bottom of the pyramid, because that implies you need them sooner and more deeply!

Intermediate - Important and foundational but maybe a bit more complex to implement than the basic level controls. An example: SSO.

Advanced - Legitimately better for security, but potentially hard to do or still in more of an early-adopter phase. An example: YubiKey.

We defined intermediate level tools as important or foundational for security but potentially more work to implement or higher complexity. They are well understood and widely believed to be important. They are not experimental.

Single Sign-On (SSO) - Using single sign-on helps us maintain one list of current users and one core set of credentials, ideally protected with multi-factor authentication (MFA) as well. Using SSO usually means that if a user leaves, we can remove them in one place and that removal cascades to all of the other systems they had access to. This reduces errors and oversights related to privileges that never expire. We recommend using SSO for all email, financial, and cloud hosting systems where it is supported. Moving everything at once is often tricky, so it can help to make a backlog and take on one or two systems each week or month, depending on your bandwidth.
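The backlog idea above can be sketched in a few lines. This is a minimal illustration, assuming you keep a simple list of apps with hypothetical `category`, `supports_sso`, and `on_sso` fields; the priority order (email, then financial, then cloud hosting) is our assumption based on the recommendation above, not a standard.

```python
from dataclasses import dataclass

# Categories the post recommends putting behind SSO first; the ranking is an assumption.
PRIORITY = {"email": 0, "financial": 1, "cloud_hosting": 2}

@dataclass
class App:
    name: str
    category: str        # hypothetical label, e.g. "email" or "docs"
    supports_sso: bool
    on_sso: bool

def sso_backlog(apps: list[App], per_batch: int = 2) -> list[list[str]]:
    """Group apps that support SSO but are not on it yet into small batches,
    highest-priority categories first."""
    todo = [a for a in apps if a.supports_sso and not a.on_sso]
    # Unlisted categories sort after the priority ones; ties break by name.
    todo.sort(key=lambda a: (PRIORITY.get(a.category, len(PRIORITY)), a.name))
    names = [a.name for a in todo]
    return [names[i:i + per_batch] for i in range(0, len(names), per_batch)]
```

Each inner list is one week's (or month's) worth of migrations; apps that do not support SSO at all drop out and become exceptions to track separately.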

Asset Discovery and Management - A common refrain among security experts these days is that "you can't protect what you don't know you have." An asset is a laptop, a server, a company-controlled phone, or a software system, even one in the cloud. You could think of data as an asset too, but that tends to complicate the discussion a lot. Asset discovery is the idea that you can accumulate an inventory of your assets by watching interactions, network connections, logins, and so on. There are more advanced asset management capabilities, but at the core, simply knowing what assets you have is a good start.
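As a rough sketch of discovery by observation, the code below folds a stream of events (logins, DHCP leases, flow records) into an inventory and reports assets you have seen but never recorded. The event shape is hypothetical; real sources such as an identity provider or firewall would need their own parsing.

```python
from dataclasses import dataclass, field

@dataclass
class Asset:
    asset_id: str
    kinds: set[str] = field(default_factory=set)   # event sources that saw it
    users: set[str] = field(default_factory=set)
    first_seen: str = ""
    last_seen: str = ""

def build_inventory(events: list[dict]) -> dict[str, Asset]:
    """Accumulate an inventory from observed events shaped like (hypothetically)
    {"ts": "2024-05-01T09:00:00Z", "asset": "laptop-042", "source": "login", "user": "alice"}."""
    inventory: dict[str, Asset] = {}
    # ISO-8601 UTC timestamps in one format sort chronologically as strings.
    for ev in sorted(events, key=lambda e: e["ts"]):
        asset = inventory.setdefault(ev["asset"], Asset(ev["asset"], first_seen=ev["ts"]))
        asset.kinds.add(ev["source"])
        if ev.get("user"):
            asset.users.add(ev["user"])
        asset.last_seen = ev["ts"]
    return inventory

def unknown_assets(inventory: dict[str, Asset], known_ids) -> list[str]:
    """Assets observed in the wild that are missing from the official inventory."""
    return sorted(set(inventory) - set(known_ids))
```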

Network Segmentation - Segmentation is basically another term for hard separation between different networks. In an office, you might want to have separate networks for HR / Finance / Software Engineering. If you run any of your own corporate infrastructure or online services in your own network, these should certainly be segmented from the rest of your network. In a simple case, that essentially means that we should not allow network traffic between the production area and the office area.
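Segmentation is enforced in firewalls, VLANs, or cloud security groups, but the policy itself is easy to express as "deny by default, allow only listed segment pairs." The sketch below models that policy so you could, for example, check flow logs against it. The address ranges and segment names are hypothetical.

```python
import ipaddress
from typing import Optional

# Hypothetical segments for a small office plus self-hosted production infrastructure.
SEGMENTS = {
    "hr":          ipaddress.ip_network("10.10.0.0/24"),
    "finance":     ipaddress.ip_network("10.20.0.0/24"),
    "engineering": ipaddress.ip_network("10.30.0.0/24"),
    "production":  ipaddress.ip_network("10.99.0.0/16"),
}

# Explicit allow-list of (source segment, destination segment) pairs.
# Anything not listed is denied, so office-to-production traffic is blocked by default.
ALLOWED = {
    ("hr", "hr"), ("finance", "finance"),
    ("engineering", "engineering"), ("production", "production"),
}

def segment_of(ip: str) -> Optional[str]:
    addr = ipaddress.ip_address(ip)
    for name, net in SEGMENTS.items():
        if addr in net:
            return name
    return None

def is_allowed(src_ip: str, dst_ip: str) -> bool:
    src, dst = segment_of(src_ip), segment_of(dst_ip)
    # Unknown addresses are denied as well.
    return src is not None and dst is not None and (src, dst) in ALLOWED
```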

Log Aggregation - Collecting logs from across your organization is essential both for detecting issues and for investigating them. Ideally, system event logs, firewall logs, other tool-based logs, and application-level logs should all flow to a central log aggregation service that supports effective querying and exploration.
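Much of the value of aggregation comes from normalizing different formats into one shared schema so they can be queried together. The sketch below assumes two hypothetical sources, a plain-text firewall line and a JSON application log; a real aggregation service does this at scale with indexing.

```python
import json
import re

# Hypothetical firewall format: "<ts> <ALLOW|DENY> <src> -> <dst>:<port>"
FW_PATTERN = re.compile(
    r"^(?P<ts>\S+) (?P<action>ALLOW|DENY) (?P<src>\S+) -> (?P<dst>\S+):(?P<port>\d+)$"
)

def normalize(source: str, line: str) -> dict:
    """Map one raw line from a known source into a shared record schema."""
    if source == "firewall":
        m = FW_PATTERN.match(line)
        if m is None:
            return {"ts": None, "source": source, "raw": line, "parse_error": True}
        rec = m.groupdict()
        rec["port"] = int(rec["port"])
        return {"ts": rec.pop("ts"), "source": source, **rec}
    if source == "app":
        rec = json.loads(line)   # hypothetical app logs carry a "time" field
        return {"ts": rec.pop("time"), "source": source, **rec}
    raise ValueError(f"unknown source: {source}")

def query(records: list[dict], **criteria) -> list[dict]:
    """Return records whose fields equal every key=value pair in criteria."""
    return [r for r in records if all(r.get(k) == v for k, v in criteria.items())]
```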

Software Dependency Checks - When you build software, you almost always use libraries that are open source or written by a third party. For any of these, it is critical to know when you need an update and to have a process for applying it. Many package managers (e.g. npm) have auditing built in, so you can quickly identify and upgrade dependencies to address an inherited vulnerability.
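One way to wire `npm audit` into CI is to read its JSON report and fail the build above a severity threshold. This sketch assumes the npm 7+ report format, which includes a `metadata.vulnerabilities` summary with per-severity counts; npm's own `--audit-level` option covers the simple case, and a script like this is only useful if you want custom handling.

```python
import json
import subprocess

SEVERITIES = ["info", "low", "moderate", "high", "critical"]

def blocking_count(report: dict, threshold: str = "high") -> int:
    """Count vulnerabilities at or above `threshold`, using the
    metadata.vulnerabilities summary from `npm audit --json` (npm 7+ format assumed)."""
    counts = report.get("metadata", {}).get("vulnerabilities", {})
    floor = SEVERITIES.index(threshold)
    return sum(counts.get(sev, 0) for sev in SEVERITIES[floor:])

def run_npm_audit(project_dir: str) -> dict:
    # npm audit exits non-zero when it finds issues, so don't pass check=True.
    proc = subprocess.run(["npm", "audit", "--json"], cwd=project_dir,
                          capture_output=True, text=True)
    return json.loads(proc.stdout)
```

In CI you would call `run_npm_audit(".")` and exit non-zero when `blocking_count(...)` is above zero.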

Source Code Management (SCM) - Platforms and tools such as Git and SVN allow us to keep track of the version of software that we are deploying to production. It is essential that the exact version of code running in production is captured in some sort of SCM system. Ideally, there should be additional controls around how code is updated and managed, but having an SCM is the most basic baseline.
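A simple way to make sure production always maps to an exact commit is to stamp every release with the commit SHA and refuse to build from uncommitted changes. The sketch below assumes Git with SHA-1 object IDs (40 hex characters); the manifest fields are hypothetical.

```python
import re
import subprocess

SHA_RE = re.compile(r"^[0-9a-f]{40}$")   # assumes a SHA-1 Git repository

def release_stamp(sha: str, dirty: bool, version: str) -> dict:
    """Build metadata to bake into a release artifact so production can be traced
    back to one commit. Builds from a dirty working tree are rejected."""
    if not SHA_RE.match(sha):
        raise ValueError(f"not a full git commit SHA: {sha!r}")
    if dirty:
        raise RuntimeError("working tree has uncommitted changes; commit before releasing")
    return {"version": version, "commit": sha}

def current_git_state(repo_dir: str = ".") -> tuple[str, bool]:
    """Return (HEAD commit SHA, whether there are uncommitted changes)."""
    sha = subprocess.run(["git", "rev-parse", "HEAD"], cwd=repo_dir,
                         capture_output=True, text=True, check=True).stdout.strip()
    status = subprocess.run(["git", "status", "--porcelain"], cwd=repo_dir,
                            capture_output=True, text=True, check=True).stdout
    return sha, bool(status.strip())
```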

Change Management Processes - In some organizations, changes just flow out as they happen. This can be nice early on, but eventually two changes will conflict or some change will surprise a stakeholder, and you will want to start controlling what goes out. Thankfully, with modern SCM and automated deployments, we can use protected branches and clear gates for accepting pull requests (PRs) as the backbone of the change control process.
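Hosted SCM platforms enforce branch protection natively, so you rarely need to write this yourself, but the gate is easier to reason about written out. This sketch models a typical rule set, assuming one protected branch named `main` (hypothetical), at least one approval from someone other than the author, and passing CI.

```python
from dataclasses import dataclass, field

PROTECTED = {"main"}   # hypothetical protected branch name(s)

@dataclass
class PullRequest:
    author: str
    target: str
    approvals: set[str] = field(default_factory=set)
    ci_passed: bool = False

def merge_blockers(pr: PullRequest, required_approvals: int = 1) -> list[str]:
    """Return the reasons a PR may not merge yet; an empty list means it can."""
    if pr.target not in PROTECTED:
        return []
    reasons = []
    independent = pr.approvals - {pr.author}   # self-approval doesn't count
    if len(independent) < required_approvals:
        reasons.append(f"needs {required_approvals} approval(s) from someone other than the author")
    if not pr.ci_passed:
        reasons.append("CI checks have not passed")
    return reasons
```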

Vendor Management for Sub-Processors - This item is a bit overloaded and covers two main concepts, but for a reason. First, we need to know who our key sub-processors are: any system that our system is built upon, where the operators of that system might theoretically have access to some of the data in our system. (Ideally, this list will be short; ours includes AWS and Heroku.) Once you know who your sub-processors are, step back and make sure they meet your security and privacy requirements. In the long run, you'll want to do this for all of your vendors, but to start (hence the consolidated item), it helps to focus on sub-processors.

Penetration Testing - Eventually you will want to do an ethical hacking exercise called a penetration test, or pentest. This is a very basic and common exercise that many standards and audits require. We have a deeper dive on how to procure a pentest in a separate post, but this is a proven and essential part of even a moderately robust security program.

Vulnerability Scanning - Vulnerability scanning is even more basic than pentesting: it involves probing a network for devices that have known vulnerabilities. This is also a very common and basic requirement and something you probably want to build into your program sooner rather than later.
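A scanner has two halves: probing hosts to learn which products and versions they run, and matching those against known-vulnerable version ranges. The sketch below shows only the matching half, assuming the probe results are already collected and that versions are plain dotted numbers; the product name and advisory ID are hypothetical.

```python
from dataclasses import dataclass

def parse_version(v: str) -> tuple[int, ...]:
    # Handles plain dotted numeric versions only, e.g. "2.4.49".
    return tuple(int(part) for part in v.split("."))

@dataclass(frozen=True)
class Advisory:
    advisory_id: str
    product: str
    introduced: str   # first affected version (inclusive)
    fixed: str        # first fixed version (exclusive upper bound)

def findings(services: list[dict], advisories: list[Advisory]) -> list[tuple[str, str]]:
    """Return (host, advisory_id) pairs for services in an affected version range.
    Each service looks like {"host": ..., "product": ..., "version": ...}."""
    hits = []
    for svc in services:
        ver = parse_version(svc["version"])
        for adv in advisories:
            if (svc["product"] == adv.product
                    and parse_version(adv.introduced) <= ver < parse_version(adv.fixed)):
                hits.append((svc["host"], adv.advisory_id))
    return hits
```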

Cloud-Based Backups for Cloud Storage and Databases - If you use RDS or any type of cloud-based storage (such as S3), you should make sure you have backups turned on and accessible. This is essential for business continuity and disaster recovery (BCP/DR) planning.
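On AWS, a quick audit can flag RDS instances whose automated backups are disabled (a `BackupRetentionPeriod` of 0) and S3 buckets without versioning. Versioning protects against overwrites and deletes but is not a full backup on its own, so treat this as a first check only. The helpers take boto3 response dictionaries so they can be tested offline; `audit_account` assumes boto3 is installed and credentials are configured.

```python
def rds_without_backups(describe_db_instances_response: dict) -> list[str]:
    """RDS automated backups are disabled when BackupRetentionPeriod is 0."""
    return [
        db["DBInstanceIdentifier"]
        for db in describe_db_instances_response.get("DBInstances", [])
        if db.get("BackupRetentionPeriod", 0) == 0
    ]

def bucket_versioning_enabled(get_bucket_versioning_response: dict) -> bool:
    # "Status" is absent for buckets that never had versioning turned on.
    return get_bucket_versioning_response.get("Status") == "Enabled"

def audit_account(region: str = "us-east-1") -> dict:
    import boto3  # imported here so the helpers above work without AWS access

    rds = boto3.client("rds", region_name=region)
    s3 = boto3.client("s3")
    no_backup = []
    for page in rds.get_paginator("describe_db_instances").paginate():
        no_backup += rds_without_backups(page)
    unversioned = [
        b["Name"] for b in s3.list_buckets()["Buckets"]
        if not bucket_versioning_enabled(s3.get_bucket_versioning(Bucket=b["Name"]))
    ]
    return {"rds_without_backups": no_backup, "s3_unversioned": unversioned}
```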

Advanced security needs still address important issues, but they are usually more complicated to implement, require interactions or integrations, and may still be in the early-adoption phase of a security product lifecycle.

Note that the Advanced name is not intended to necessarily mean "technically advanced". It means it would take an advanced organization to run them and we typically see them in higher maturity organizations.

YubiKey / FIDO2 - While this technology isn't as widespread as it could be, it is widely supported in browsers (via WebAuthn). A hardware key or built-in authenticator, optionally unlocked with a biometric, works with the browser so that the secret never leaves the device and the credential cannot be used on a lookalike URL.
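The lookalike-URL protection comes from the browser writing the page's real origin into the client data that the authenticator signs, which the server then checks. The sketch below shows only those client-data checks (type, challenge, origin) of a WebAuthn assertion; it deliberately skips signature and authenticator-data verification, which you should leave to a maintained WebAuthn library. The origin value is hypothetical.

```python
import base64
import hmac
import json

def b64url_decode(s: str) -> bytes:
    # WebAuthn uses base64url without padding; restore padding before decoding.
    return base64.urlsafe_b64decode(s + "=" * (-len(s) % 4))

def check_client_data(client_data_json: bytes, expected_challenge: bytes,
                      expected_origin: str) -> None:
    """Partial WebAuthn assertion check (client data only). Raises ValueError on failure."""
    data = json.loads(client_data_json)
    if data.get("type") != "webauthn.get":
        raise ValueError("not an authentication assertion")
    if not hmac.compare_digest(b64url_decode(data.get("challenge", "")), expected_challenge):
        raise ValueError("challenge mismatch (possible replay)")
    if data.get("origin") != expected_origin:
        # The browser records the origin it actually visited, so a lookalike domain fails here.
        raise ValueError(f"unexpected origin {data.get('origin')!r}")
```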

Cloud Security Assurance - Often we build things very quickly in the cloud and rarely do we go back to make sure we followed good practices. Sometimes when we first build things, those good practices aren't even widely known - or they may not exist! In any case, a tool that automatically helps review your cloud environment for security issues can be a good way to quickly and effectively identify and mitigate risks.
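Cloud security assurance tools run many checks of this kind automatically. As one example, this sketch flags AWS security groups that expose SSH or RDP to the whole internet. It takes a dictionary shaped like EC2 `DescribeSecurityGroups` output so it can be tested offline; the choice of sensitive ports is an assumption.

```python
WORLD = {"0.0.0.0/0", "::/0"}

def open_to_world(describe_security_groups_response: dict,
                  sensitive_ports=(22, 3389)) -> list[tuple[str, int]]:
    """Flag ingress rules that expose sensitive ports to 0.0.0.0/0 or ::/0."""
    findings = []
    for sg in describe_security_groups_response.get("SecurityGroups", []):
        for perm in sg.get("IpPermissions", []):
            cidrs = {r.get("CidrIp") for r in perm.get("IpRanges", [])}
            cidrs |= {r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])}
            if not WORLD & cidrs:
                continue
            if perm.get("IpProtocol") == "-1":   # all protocols and ports
                findings += [(sg["GroupId"], p) for p in sensitive_ports]
                continue
            lo, hi = perm.get("FromPort"), perm.get("ToPort")
            if lo is None or hi is None:
                continue
            findings += [(sg["GroupId"], p) for p in sensitive_ports if lo <= p <= hi]
    return findings
```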

Security Code Review - Security code review is an advanced offering, but it can identify certain classes of security issues even more effectively than tooling or pentesting. If you have a serious need for security (e.g. fintech or blockchain), a code review might be a really good idea.
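A classic example of what a reviewer looks for is user input concatenated into SQL. The sketch below shows the vulnerable pattern and the parameterized fix side by side, using Python's built-in `sqlite3`; the table and column names are hypothetical.

```python
import sqlite3

def find_user_unsafe(conn: sqlite3.Connection, email: str):
    # Vulnerable: input is spliced into the SQL text, so a value such as
    # "' OR '1'='1" rewrites the WHERE clause and matches every row.
    return conn.execute(f"SELECT id, email FROM users WHERE email = '{email}'").fetchall()

def find_user_safe(conn: sqlite3.Connection, email: str):
    # Fixed: a parameterized query keeps the input as data, never as SQL.
    return conn.execute("SELECT id, email FROM users WHERE email = ?", (email,)).fetchall()
```

Automated tools catch many injection bugs like this one; review tends to add the most value on logic flaws, such as a missing authorization check, that no scanner knows to look for.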

Comprehensive Vendor Management - In a more advanced setting, we need deeper and more comprehensive vendor management. This may include actually auditing some vendors, and it certainly includes keeping a complete inventory and evaluating the risk of each vendor against criteria appropriate to the sensitivity tier of the data it handles.
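Tier-appropriate criteria can be as simple as a table mapping each data sensitivity tier to the evidence a vendor must provide, plus a report of what is still missing. The tiers and evidence names below are hypothetical placeholders for your own policy.

```python
# Hypothetical tiers and evidence requirements; tune these to your own policy.
REQUIRED_EVIDENCE = {
    "restricted":   {"soc2_type2_or_iso27001", "dpa_signed", "pentest_summary", "annual_review"},
    "confidential": {"soc2_type2_or_iso27001", "dpa_signed"},
    "internal":     {"security_questionnaire"},
    "public":       set(),
}

def vendor_gaps(vendors: list[dict]) -> dict[str, set[str]]:
    """Map each vendor name to the evidence it still owes for its data tier."""
    gaps = {}
    for v in vendors:
        missing = REQUIRED_EVIDENCE[v["tier"]] - set(v.get("evidence", []))
        if missing:
            gaps[v["name"]] = missing
    return gaps
```

Sub-processors naturally land in the highest tiers, which is why they are the place to start.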

Zero Trust - Zero trust is an established approach, but it is not widely used (or at least not widely used effectively), and it should be considered for implementation. Particularly for access to production data, it can be a very effective deterrent against most types of compromise. The technology works, but quite a lot of consolidation and standardization is still happening here.
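The core of zero trust is that every request is evaluated on identity, device state, and the sensitivity of the resource, and network location is never a reason to allow access. The sketch below is a toy policy decision function under that model; the resource names, groups, and rules are hypothetical, and the device-compliance flag would typically come from an MDM.

```python
from dataclasses import dataclass

@dataclass
class AccessRequest:
    user: str
    mfa_verified: bool
    device_compliant: bool      # e.g. as reported by MDM
    groups: frozenset
    resource: str

# Hypothetical policy: which group may reach each resource, and how sensitive it is.
RESOURCE_POLICY = {
    "prod-db": {"group": "sre", "sensitivity": "high"},
    "wiki":    {"group": "staff", "sensitivity": "low"},
}

def decide(req: AccessRequest) -> tuple[bool, str]:
    """Evaluate one request on its own merits; source network is never an input."""
    policy = RESOURCE_POLICY.get(req.resource)
    if policy is None:
        return False, "unknown resource (default deny)"
    if not req.mfa_verified:
        return False, "MFA required"
    if policy["group"] not in req.groups:
        return False, "not authorized for resource"
    if policy["sensitivity"] == "high" and not req.device_compliant:
        return False, "device posture insufficient for sensitive resource"
    return True, "allow"
```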

Security Information and Event Management (SIEM) - A SIEM builds on central logging or log aggregation by adding detection rules to the central platform. These tools can be noisy and painful, but they are very commonly deployed in moderate to advanced environments. Note that to use a SIEM effectively, you may also need a SOC (Security Operations Center) with analysts who handle events.
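A SIEM rule is essentially a query that runs continuously over aggregated logs. The sketch below expresses one common detection, several failed logins followed by a success within a short window (a possible password-guessing win). The event shape, threshold, and window are assumptions; timestamps are naive ISO-8601 strings in one consistent format.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

def brute_force_then_success(events, threshold=5, window=timedelta(minutes=10)):
    """Alert when a user has at least `threshold` failed logins followed by a
    success, all within `window`. Events: dicts with "ts", "user", "outcome"."""
    recent_failures = defaultdict(deque)
    alerts = []
    for ev in sorted(events, key=lambda e: e["ts"]):
        ts = datetime.fromisoformat(ev["ts"])
        fails = recent_failures[ev["user"]]
        while fails and ts - fails[0] > window:   # drop failures outside the window
            fails.popleft()
        if ev["outcome"] == "failure":
            fails.append(ts)
        elif ev["outcome"] == "success":
            if len(fails) >= threshold:
                alerts.append({"user": ev["user"], "ts": ev["ts"], "failures": len(fails)})
            fails.clear()
    return alerts
```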

Bug Bounty - Bug bounty programs provide a way to harness anonymous security researchers for evaluating your platform. This has the benefit of both early notice and potentially controlling the dissemination of information. However, while the companies that run bug bounty programs are well established, your mileage may vary, and to be effective you will need staff to engage with researchers.

User Audit and Review - Any company that is serious about security needs a way to audit user access to different systems. Platforms and SSO providers often make this easier, but there are almost always exceptions.
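A periodic access review boils down to reconciling each system's account list against who currently works here and who was approved for that system. This sketch assumes you can export account lists per system (especially the exceptions not behind SSO); the system names and data are hypothetical.

```python
def access_review(system_accounts: dict[str, set[str]], active_staff: set[str],
                  approved: dict[str, set[str]]) -> dict[str, dict[str, list[str]]]:
    """For each system, list accounts of people who have left and accounts
    held by current staff that nobody approved for that system."""
    report = {}
    for system, accounts in system_accounts.items():
        departed = sorted(accounts - active_staff)
        unapproved = sorted((accounts & active_staff) - approved.get(system, set()))
        if departed or unapproved:
            report[system] = {"departed": departed, "unapproved": unapproved}
    return report
```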

Mobile Device Management (MDM) - MDM solutions provide a way to ensure that only known devices with certain configurations can be used for company business. Although BYOD is common in startups, we see everyone adopt some form of company-provided device coupled with MDM as headcount grows to 25-50.
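MDM products express requirements as policies or configuration profiles and report whether each device complies. The sketch below models that compliance evaluation against a small baseline; the thresholds and device fields are hypothetical, and the OS version is assumed to be a dotted numeric string.

```python
MIN_OS_VERSION = (14, 0)       # hypothetical minimum OS version
MAX_SCREEN_LOCK_MINUTES = 5    # hypothetical maximum idle time before lock

def compliance_issues(device: dict) -> list[str]:
    """Return the baseline requirements a device fails; empty means compliant."""
    issues = []
    if not device.get("disk_encrypted", False):
        issues.append("disk encryption off")
    lock = device.get("screen_lock_minutes")
    if lock is None or lock > MAX_SCREEN_LOCK_MINUTES:
        issues.append("screen lock missing or too long")
    os_version = tuple(int(p) for p in device.get("os_version", "0").split("."))
    if os_version < MIN_OS_VERSION:
        issues.append("OS below minimum version")
    return issues

def allowed_for_company_use(device: dict) -> bool:
    return not compliance_issues(device)
```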

Web Application Firewall (WAF) - A web application firewall inspects layer 7 (HTTP) traffic and can detect and/or block certain types of attacks. WAF is an established technology with limitations. Given how easily a WAF can be implemented ($20/month and a DNS change), it is hard to argue against having one in place.
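To make "inspects layer 7 traffic" concrete, here is a toy signature check on decoded query-string values. Real WAF rule sets (for example the OWASP Core Rule Set) are far larger and carefully tuned against false positives; these two patterns are illustrative assumptions only and are easy to evade.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Toy signatures only; production rule sets are much broader and tuned.
SIGNATURES = {
    "sqli": re.compile(r"('|\")\s*(or|and)\s+\S+\s*=\s*\S+|union\s+select", re.IGNORECASE),
    "xss":  re.compile(r"<\s*script|javascript:|on\w+\s*=", re.IGNORECASE),
}

def inspect(url: str) -> list[str]:
    """Return the names of signatures matched by any query-string value."""
    params = parse_qsl(urlsplit(url).query, keep_blank_values=True)
    hits = set()
    for _, value in params:   # parse_qsl has already URL-decoded the values
        for name, pattern in SIGNATURES.items():
            if pattern.search(value):
                hits.add(name)
    return sorted(hits)
```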

Intrusion Detection System (IDS) - An intrusion detection system can run on a host, on a network, or in a cloud-based environment. Lately, we recommend using the IDS provided by your cloud provider because it is by far the most straightforward to set up and run. In larger companies, it can make sense to have dedicated teams operating SIEM and IDS, which often work together.
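Besides signature matching, an IDS uses behavioral heuristics. A classic one is port-scan detection: a single source touching many distinct ports on one host within a short time. The sketch below implements that heuristic with a sliding window; the thresholds and the connection tuple format are assumptions.

```python
from collections import defaultdict

def detect_port_scans(connections, port_threshold=20, window_seconds=60):
    """Flag (src, dst) pairs where src touches at least `port_threshold` distinct
    destination ports within `window_seconds`.
    Connections are (epoch_seconds, src_ip, dst_ip, dst_port) tuples."""
    by_pair = defaultdict(list)
    for ts, src, dst, port in sorted(connections):
        by_pair[(src, dst)].append((ts, port))
    alerts = set()
    for pair, events in by_pair.items():
        start = 0
        for end in range(len(events)):
            # Slide the window start forward until it spans at most window_seconds.
            while events[end][0] - events[start][0] > window_seconds:
                start += 1
            ports = {p for _, p in events[start:end + 1]}
            if len(ports) >= port_threshold:
                alerts.add(pair)
                break
    return sorted(alerts)
```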

Dynamic Application Security Testing (DAST) - DAST is like network scanning, but it is done at the application level. DAST scans can identify some sorts of security flaws in a web application, and with OWASP ZAP and a variety of other free and open options, it is a good idea to collect this information before your adversaries do. Unauthenticated DAST scanning can be low yield, but it is a standard tool in the set and routinely finds interesting things.
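One of the simplest things an unauthenticated DAST pass reports is missing security response headers. The sketch below performs only that passive check against a running site you are authorized to test; tools such as OWASP ZAP do this and much more, including active attack probes. The required-header list is a policy assumption.

```python
from urllib.request import urlopen

# Headers commonly checked by passive scanners; which ones you require is a policy choice.
EXPECTED_HEADERS = {
    "strict-transport-security",
    "content-security-policy",
    "x-content-type-options",
}

def missing_security_headers(headers: dict[str, str]) -> list[str]:
    """Return expected headers absent from a response (case-insensitive)."""
    present = {k.lower() for k in headers}
    return sorted(EXPECTED_HEADERS - present)

def fetch_headers(url: str) -> dict[str, str]:
    # Note: urlopen raises HTTPError for 4xx/5xx responses.
    with urlopen(url, timeout=10) as resp:
        return dict(resp.headers.items())
```

Usage: `missing_security_headers(fetch_headers("https://your-app.example"))`.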

The goal of this post was to elaborate on the Intermediate and Advanced levels in our Security Hierarchy of Needs. Is there something you think should be in a different tier? We'd love to hear from you!