The OWASP Top 10 has changed the way I think about security as a developer. It has had a huge impact on the industry and remains one of the most important sets of guiding principles developers can get their hands on. It is also misused a lot, as we have written about for years. For the simplest example: there are no tools that can test for the Top 10, but that doesn't stop companies from claiming that theirs can!

Over the past 10 years we have seen a proliferation of Top 10 lists: for Mobile, for APIs, for LLMs. I think it is time to take a step back and talk again about what a Top 10 is for and how to make it really great. I want to give a nod to Jim Manico and John Steven, who talked about flaws in the OWASP Top 10 in their memorable 2017 AppSec USA keynote.

This post talks about some ways to move forward and make these lists more effective for developers. This is not the first post about Top 10 lists and will not be the last, but I think it offers a unique perspective. The TL;DR: we need to think more about the developers consuming these lists.

I remember my first AppSec EU. The behind-the-scenes drama was that someone was manipulating the Mobile Top 10 to include things their toolset could best find. Since the committee running the process was made up entirely of volunteers, this was difficult to manage. Who knew what the truth was? Honestly, I'm still not sure.

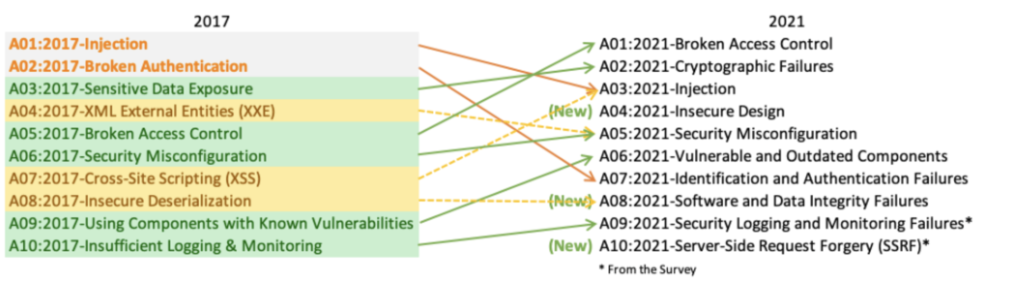

The main Top 10 project had already started working hard to gather data and normalize it to make the Top 10 more data-driven. A large part of the motivation was to avoid the appearance of favoring vendors. I really think this work was tireless and produced a great improvement in the Top 10 list, starting with the 2017 edition. A good document about this is a presentation from the Cambridge chapter of OWASP given by David Johannson, with background from Andrew van der Stock.

Since 2017, I think the efforts to make the Top 10 more data-driven have been awesome and reflect a commitment to the broader community that only OWASP has really been able to achieve over the years. Having said that, I think the 2021 list has a number of practical flaws. Every list is flawed, but there is a sort of directional drift problem that I think is worth calling out for discussion.

I give OWASP Top 10 training fairly often. A common comment from people is that they liked the 2017 Top 10 better because it was clearer what each item was.

The OWASP Top 10 as presented at: https://owasp.org/www-project-top-ten/

There are a number of confusing parts of the 2021 Top 10:

I think one of the challenges of building a Top 10 list is balancing the level of abstraction and deciding what to include based on what the audience uses the list for. The 2021 OWASP Top 10, which is still the best list we have right now, mixes metaphors: it combines some very specific vulnerability classes (e.g., SSRF) with more general ideas (Insecure Design). Note that Jim Manico and John Steven argued that the items in the OWASP Top 10 may not be vulnerabilities at all! So what are they? Should security Top 10 list items be risks? Vulnerabilities? Common pitfalls? I think we're framing the whole question wrong when we think about it in security terms.

To make these lists more effective, maybe we need to think about them from a developer's perspective. When developers see a Top 10 list, they want to know, for each of the 10 items, whether they are already doing it and, if not, how to do it. This should take just a few minutes per item. Maybe it should just be a to-do list containing the Top 10 things to do!?

As an exercise, imagine if there were two different OWASP Top 10 lists.

Stepping back, I think one reason the OWASP Top 10 is so popular is that it seems to capture a bunch of the most important security problems in one quick place. With older versions of the Top 10, that was probably more true! It may even be why the OWASP Top 10 was specifically called out in the PCI-DSS standard, which brought it kicking and screaming into the mainstream as a requirement for applications that handle credit card data.

So if you are a developer and you need a checklist of 10 things not to do, which is more helpful:

I can identify vectors to check for SQL Injection and take concrete actions to prevent it.
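To show what a "concrete action" looks like in practice, here is a minimal Python sketch using the standard-library `sqlite3` module. The `users` table and the function names are hypothetical, made up for this illustration. It contrasts a query built by string concatenation with a parameterized query, the first defense recommended in OWASP's SQL Injection Prevention Cheat Sheet.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    # Hypothetical in-memory table used only for this demonstration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: user input is concatenated into the SQL text, so a crafted
    # value can change the structure of the query itself.
    query = ("SELECT id, username FROM users WHERE username = '"
             + username + "' ORDER BY id")
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Parameterized: the driver binds `username` as a value, never as SQL syntax.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ? ORDER BY id", (username,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # [(1, 'alice'), (2, 'bob')]
    print(find_user_safe(conn, payload))    # []
```

The classic payload turns the unsafe query's `WHERE` clause into a condition that is always true, while the parameterized version simply looks for a user literally named `' OR '1'='1`. A developer can check for and apply that kind of change in minutes.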

Insecure design is like a graduate-level course in software development that doesn't immediately tell me ANYTHING I need to do. A reader is left with the sense that they will never be able to meet this expectation. Don't get me wrong: I think the abstracted list is critically important, but maybe for different reasons. I find that the abstracted list is more useful if you are studying application security itself and perhaps developing new ideas or attacks. Conceptually, if you understand design-level issues, you have a good basis for thinking about security in other contexts.

So what if... one Top 10 list covered the practical things you need to protect against and the other focused on the abstractions? Of course, this gets way more complicated when you consider how different even the OWASP Top 10 really is across programming languages.

IEEE has a paper called "Avoiding the Top 10 Software Security Design Flaws," and it has some of the same problems. At a glance, is "Earn or Give, but Never Assume Trust" really different from "Authorize After You Authenticate"? Are these even definitively the top 10 flaws? Some are quite good, and this type of list stands the test of time in many ways.

There are also Top 10 lists for:

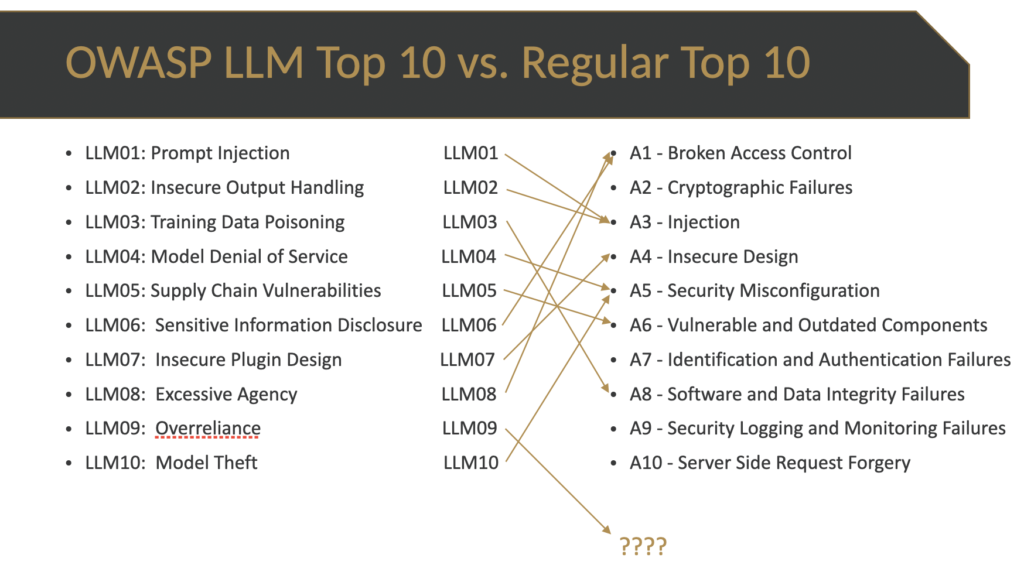

My observation from these lists is that many of the items repeat what the main OWASP Top 10 says. Consider the LLM Top 10 for instance:

I put a slide in my training about how this is not really that different from the OWASP Top 10.

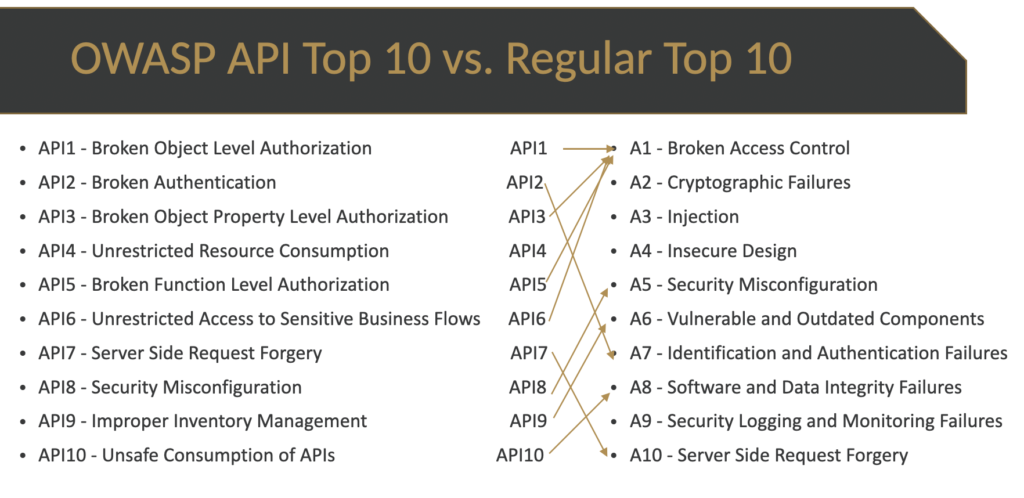

The same is true for the API Top 10.

What I am trying to say (through these visual examples) is that the more detailed Top 10 lists basically roll up into the regular Top 10 if you let them. So if you allow them to be too abstract, they seem redundant with, or even contradictory to, the other lists.

As shown in the previous section, without a clearer set of guidelines about the level of consistency and abstraction of a particular Top 10 list, we end up with lists that overlap and seem ambiguous.

My instinct is to focus on one Abstract Top 10 List (maybe the main OWASP Top 10) and a number of topical Detailed Top 10 Lists. It should be clear that you need both!

You know you have a good item for the Abstract Top 10 List if it captures a rollup of items from the detailed lists. In other words, if identifying sensitive data is relevant to Mobile, web apps, APIs, and LLMs, then maybe it belongs in the abstract standard.
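The rollup test can be sketched in a few lines of Python. This is a minimal illustration, not an OWASP process: the list names, item labels, and the `min_domains` threshold are hypothetical, and the sketch assumes equivalent items from different detailed lists have already been normalized to a shared label (which, in practice, is the hard part).

```python
from collections import defaultdict

# Hypothetical detailed lists; labels are illustrative, not real list contents.
# Assumes equivalent items were already normalized to the same label.
DETAILED_LISTS = {
    "web": ["injection", "sensitive_data_exposure", "broken_access_control"],
    "api": ["broken_access_control", "sensitive_data_exposure", "rate_limiting"],
    "mobile": ["sensitive_data_exposure", "insecure_storage"],
    "llm": ["injection", "sensitive_data_exposure"],
}


def rollup_candidates(detailed_lists: dict[str, list[str]], min_domains: int) -> list[str]:
    """Return the items that appear in at least `min_domains` detailed lists."""
    domains_by_item: dict[str, set[str]] = defaultdict(set)
    for domain, items in detailed_lists.items():
        for item in items:
            domains_by_item[item].add(domain)
    # Items shared across many domains are candidates for the abstract list.
    return sorted(
        item for item, domains in domains_by_item.items() if len(domains) >= min_domains
    )


if __name__ == "__main__":
    print(rollup_candidates(DETAILED_LISTS, min_domains=len(DETAILED_LISTS)))
    # ['sensitive_data_exposure']
    print(rollup_candidates(DETAILED_LISTS, min_domains=2))
    # ['broken_access_control', 'injection', 'sensitive_data_exposure']
```

Items that clear a high threshold (here, present in every domain) are natural candidates for the abstract list; everything else stays in the detailed lists, where it can be more specific and actionable.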

On the other hand, I think developers really want fine-grained, actionable detail in the Detailed Top 10 Lists. Here are some specific examples:

A key question to ask is: can the developer take a specific action based on each item in the Top 10?

I want to start by saying I think everyone working on these lists within OWASP (and IEEE) is doing an amazing job and having a real impact on developers. They are awesome leaders who are creating something positive. I do not want anything I am saying here to take away from that at all.

My goal in writing this is to spur improvements or clarifications in the processes we use to build Top 10 lists, so that they are as useful and applicable as possible.