Today I was looking back for my blog posts about security in the SDLC from 2012-2016 and I realized that I had never migrated them forward to the new website when we updated. Whoops! So … in this post I want to recap in some detail what I’ve learned about security in the SDLC.

Nearly every company we work with has a different version of how they build software. Some are more Waterfally, others are more Agile. Some are even DevOpsy. Even within these broad categories, there is always variation. At most mid-sized and large companies, there is effectively more than one variation of the SDLC in use.

For these reasons, when we build a model for integrating security into a company’s SDLC, one critical step is to stop and understand the SDLC itself. I like to draw pictures at both a unit of work level and an overall process level. We’ll present two, then circle back and dive into the details.

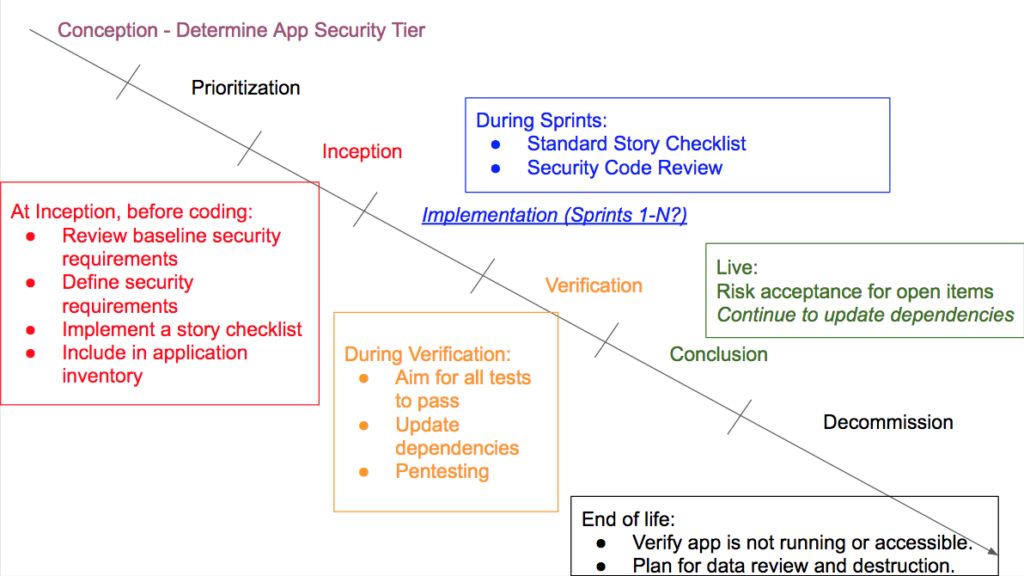

Here is a generalized high-level example. The project timeline goes from the top left to the bottom right and the phases are represented by the segments. Then we overlay security activities for each phase.

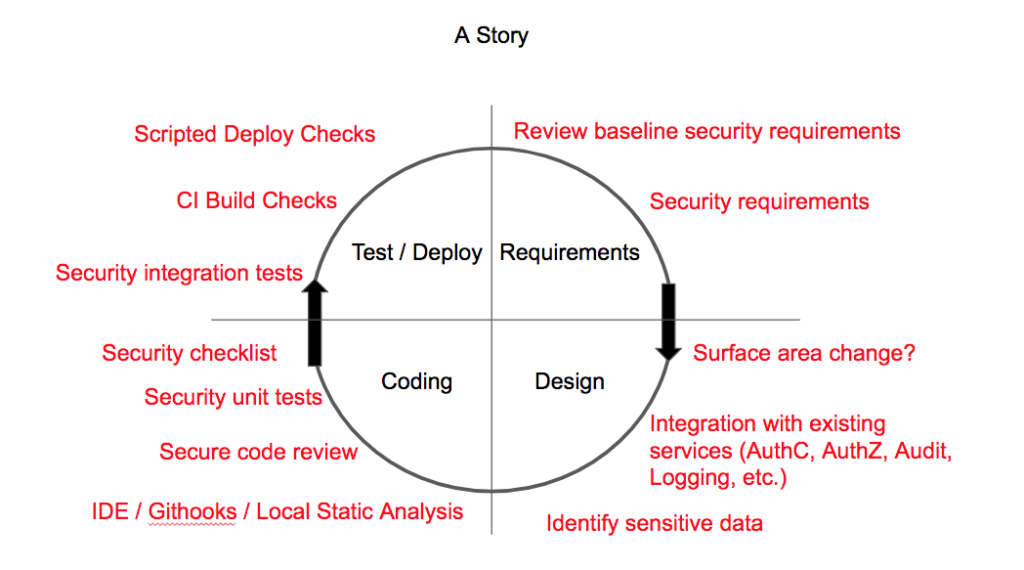

Here is an example of how we might represent a unit of work like a story and overlay security activities.

It is very useful to have visual representations of the workflows to help communicate about what activities are expected when.

In theory, we can just identify best practices for security and everyone would do them. In reality, we are constrained by:

We usually try to understand what a team is capable of and where their strengths are. For example, some organizations are very focused on penetration testing. Others have established training programs. Others have strong static analysis tools. Again, variation is the rule. Therefore, our typical advice is to find the things that are realistic for your organization and then overlay only those onto your processes.

One important part of handling security within an organization is coming to an agreement across teams about how to discuss and manage risk. This inherently involves engineering, security, business stakeholders and potentially even executives up the chain. It is beyond the scope of this post to present a model for handling risk in general, but the presence of a process, engagement of the parties and a way to triage risks are important things to look for as you think about bringing risk into the conversation.

There are a variety of ways that requirements are captured. Sometimes they are in PRDs (Product Requirements Documents). Sometimes they are in stories. The more explicitly we capture security requirements, the better job we’re going to do meeting them.

Examples of security requirements on a story might include:

Again, we want to think about specific requirements in the context of each unit of work. One way to help do that is to introduce a villain persona that we can refer to for inspiration as we imagine the abuse cases and how things can go wrong.

That being said, we also advocate for Baseline Security Requirements. Baseline security requirements are requirements that exist across all of the stories for a given system. Often they apply across different systems or services. Examples of things covered in baseline security requirements might include:

Baseline security requirements are handled like Non-Functional Requirements (NFRs), which describe the operational qualities of a system rather than features. An example of an NFR might be that a system should be able to handle 100,000 concurrent users. This is something we need to know early and design for. We think of Baseline Security Requirements in the same way. If we present global expectations and constraints, it gives the developers the chance to choose appropriate frameworks, logging, identity, encryption, etc. as described above.

For both story and baseline security requirements, it is important that we identify these up front so that estimation includes security. When estimates include security, developers have a chance of meeting security expectations.

Generally speaking, we advocate for identifying security requirements at almost every organization we work with.

Throughout the development process, developers are continually adjusting major components, choosing 3rd party libraries, adding API endpoints and changing which systems interact with each other. When we can identify these types of changes and trigger a discussion, it allows us to identify the right times to talk about security.

For example, when we add a new organization API endpoint, we want to ask who we expect to call it and what data it should expose.

If we add a logging or metrics framework like NewRelic, we might want to think about what data gets shared with NewRelic.
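
One way to act on that question is to scrub sensitive values before log records leave the process. The following is a minimal sketch, not a complete data-loss control: it assumes email addresses are the sensitive data, and the `RedactEmailsFilter` name and `EMAIL_PATTERN` regex are hypothetical choices made for illustration.

```python
import logging
import re

# Assumption: anything shaped like an email address counts as sensitive.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class RedactEmailsFilter(logging.Filter):
    """Replace email addresses in log messages before handlers emit them."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Render the message with its arguments first, then scrub the result.
        message = record.getMessage()
        record.msg = EMAIL_PATTERN.sub("[REDACTED]", message)
        record.args = ()  # arguments are already merged into msg
        return True  # keep the record, just with scrubbed content
```

Attaching the filter to the handler that forwards logs to the third party (via `handler.addFilter(RedactEmailsFilter())`) keeps local logs untouched while limiting what is shared.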

If we start to build something with Struts, we may want to step back and note that the library has a security history.

Some organizations have existing architecture teams that essentially gate the design and development process with processes to ensure supportability, health checks, etc. This is a good place to inject design review. In some cases, however, this is too coarse-grained and we’d rather see weekly or monthly checkpoints on design.

Some organizations are test-forward organizations. At these types of organizations, we advocate for security unit tests. Security unit tests can be written for all kinds of security conditions:

Generally, we recommend security unit/integration tests when organizations are already testing and their developers are capable of adding security tests. The more elaborate the business logic and potential risk of fraud or other abuse through authorization scenarios, the more likely we are to push unit tests.
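
As an illustration of an authorization-focused security unit test, here is a small sketch. The `User`, `Invoice` and `can_view_invoice` names, and the rule that invoices are visible only within the owning organization, are all hypothetical; a real application would test its own authorization layer instead.

```python
import unittest
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: int
    org_id: int
    is_admin: bool = False


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    org_id: int


def can_view_invoice(user: User, invoice: Invoice) -> bool:
    """Hypothetical rule: invoices are visible only inside the owning organization."""
    return user.org_id == invoice.org_id


class InvoiceAuthorizationTest(unittest.TestCase):
    def test_same_org_user_can_view(self):
        self.assertTrue(can_view_invoice(User(1, org_id=10), Invoice(100, org_id=10)))

    def test_other_org_user_is_denied(self):
        self.assertFalse(can_view_invoice(User(2, org_id=20), Invoice(100, org_id=10)))

    def test_admin_flag_does_not_cross_org_boundary(self):
        # Abuse case: an admin of one organization probing another's data.
        outsider_admin = User(3, org_id=20, is_admin=True)
        self.assertFalse(can_view_invoice(outsider_admin, Invoice(100, org_id=10)))
```

Saved in a test module, these run with `python -m unittest`. The negative cases are the point: they encode the abuse scenario so a later refactor cannot silently reopen it.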

On the other hand, many organizations will not be able to do testing either because they don’t have a test culture or because their developers may not take an interest in security.

At most clients, I recommend the following checklist for every story:

At one client, we built this custom checklist into TFS issues so that developers could literally check those four boxes as they confirmed that they had handled these scenarios.

Checklists will only be effective in organizations where developers can value them and are trained. That said, they are a low tech way to build security habits.

Code review is something that we do both in large projects with some clients, and on a PR by PR basis in GitHub with others and on our own internal projects. For organizations that use pull requests (PRs) already, this can be a really good place to inject security. Developers need training and time to do this properly, but in this case their existing processes support a very low-friction incremental security activity.

Static analysis is useful in some cases. Its usefulness varies based on the language and scenario.

We typically recommend starting with open tools for languages/frameworks where they are well supported. For example, Bandit with Python, Brakeman with Ruby on Rails applications, and SonarQube with Java apps. Once you know your teams and processes can work with open tools, consider more advanced commercial tools.

Some gotchas with static analysis include:

If your security team has a static analysis tool they like, consider integrating it gradually.

Continuous Integration is the process through which we automatically compile (build), test and prepare software based on many developers working on it concurrently. Throughout the day, the build server is working on each commit to continuously integrate changes and rebuild.

Jenkins, Travis, CircleCI and others help us to make sure our software can be built portably and is tested regularly. They help us identify problems as close as possible to the point in time when we were coding them.

Continuous Delivery takes CI a bit further and puts us in a position where we can deploy anytime – potentially many times a day. CD is beneficial in that it usually means that we can fix issues in production faster than an organization not practicing CD. That being said, it also suggests a level of automation that is conducive to additional security automation. We might check internal service registries and ensure all services are running under SSL as part of a post deployment check.
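
A post-deployment check along those lines might look like the sketch below. It assumes the service registry can be exported as a simple name-to-URL mapping, which is a hypothetical format, and it only inspects the registered URL scheme. It does not open a connection or validate certificates.

```python
from urllib.parse import urlparse


def find_insecure_services(registry: dict[str, str]) -> list[str]:
    """Return the names of services whose registered URL is not HTTPS."""
    insecure = []
    for name, url in sorted(registry.items()):
        if urlparse(url).scheme != "https":
            insecure.append(name)
    return insecure


# Hypothetical registry contents, for illustration only.
registry = {
    "billing": "https://billing.internal.example",
    "reports": "http://reports.internal.example:8080",
}
print(find_insecure_services(registry))  # ['reports']
```

Failing the deployment job when the returned list is non-empty turns the expectation into something the pipeline enforces rather than something people have to remember.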

We always recommend integrating tools into CI. We contributed heavily to OWASP Glue for this type of integration work. This includes dependency checking, security unit tests and other types of more custom automation. Seeing a project where only one workstation can build the software is an anti-pattern. We also recommend continuous delivery because it means we can fix faster.
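
To make the CI integration concrete, here is a rough sketch of a build gate. It is not the OWASP Glue API. It assumes some tool has already written its findings as a JSON list of objects with `id` and `severity` fields, which is a hypothetical report format, and the set of blocking severities is likewise an assumption to tune per team.

```python
import json

# Assumption: these severities should fail the build; adjust per team.
BLOCKING_SEVERITIES = {"critical", "high"}


def gate(report_json: str) -> int:
    """Return a process exit code: 0 passes the build, 1 fails it."""
    findings = json.loads(report_json)
    blocking = [
        f for f in findings
        if str(f.get("severity", "")).lower() in BLOCKING_SEVERITIES
    ]
    for finding in blocking:
        print(f"BLOCKING: {finding.get('id', 'unknown')} ({finding['severity']})")
    return 1 if blocking else 0
```

A CI step could read the report file and pass the result of `gate(...)` to `sys.exit`, so the build server treats blocking findings like any other failed check.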

Most software is built upon a set of 3rd party libraries. Whether these are commercial or open source, sometimes there are vulnerabilities in the libraries we use. In fact, for attackers, these libraries are particularly nice targets because a vulnerability in a library gives them the ability to target all N users of that library.

Thus, as an engineering group, we need to make sure that our dependencies don’t have terrible vulnerabilities in them that we inherit by building on top of them. We can do this by automating dependency checks. We like npm, retire, bundler-audit and dependency-check for doing this. We generally recommend using an open tool and building a process around it before adopting a commercial tool.

We also recommend having an explicit way to triage these items into tiers.
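
One possible shape for that triage is sketched below. The tier names, the CVSS thresholds and the `internet_facing` flag are all assumptions chosen for illustration; each organization should set its own cut-offs as part of its risk process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    package: str
    cvss: float            # base score reported by the dependency checker
    internet_facing: bool  # assumption: exposure is tracked per application


def triage(finding: Finding) -> str:
    """Map a dependency finding to a hypothetical response tier."""
    if finding.cvss >= 9.0 or (finding.cvss >= 7.0 and finding.internet_facing):
        return "fix-now"
    if finding.cvss >= 4.0:
        return "next-release"
    return "backlog"
```

Writing the rule down as code, even this crudely, means every finding lands in a tier by the same logic instead of by whoever happens to read the report.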

An often forgotten way to improve security is to take applications offline. Sometimes the cost benefit analysis just doesn’t support maintaining an application any more. Other times the application isn’t used at all anymore. Surprisingly often, these applications are still available and running – and not being updated. The easiest way to reduce the attack surface is to just turn off the application.

The real conclusion of all of this is that there are a lot of different ways to take security activities and integrate them into the SDLC. There is no one right way to do it. This post presented some of the processes we use to engage and the activities we recommend.